Watch out, cheaters—AI detectors are here to catch you and your chatbot red-handed.

Or, at least, that’s what AI developers use as a selling point and want us to believe. When ChatGPT entered the cultural zeitgeist in 2022, teachers and professors balked at the surge in AI-generated research papers and homework. To curb the use of AI in the classroom, educators have been using AI detectors that claim to distinguish AI-written text from human-written text.

But how accurate are these tools? According to Christopher Penn, Chief Data Scientist at Boston-based marketing analytics firm Trust Insights, “AI detectors are a joke.” One AI detector he tested claimed that 97.75% of the preamble to the U.S. Declaration of Independence was AI-generated.

“What led me to the testing of AI detectors was seeing colleagues battling back and forth, arguing about whether a piece of content was AI-generated,” Penn told Decrypt. “I saw this on LinkedIn; some people were lobbing accusations against each other that so-and-so was being a lazy marketer, taking the easy way out, and just using AI.”

Fighting words? Perhaps. Said Penn: “We should probably test that to understand whether or not this is actually true.”

Penn decided to test several AI detectors using the Declaration of Independence, and was dismayed by what he found: “I think they’re dangerous,” he said of such detectors. “They are unsophisticated and harmful.”

“These tools are being used to do things like disqualify students, putting them on academic probation or suspension,” he said. That’s “a very high-risk application when, in the United States, a college education is tens of thousands of dollars a year.”

We decided to run two tests of our own to see how these sites did. In the first, we used the same Declaration of Independence excerpt Penn used to determine which detectors erroneously believed the text was AI-generated. For the second test, we took an excerpt from E.M. Forster’s 1909 science fiction short story “The Machine Stops” and had ChatGPT rewrite it to see which detectors identified the passage as AI-written. Here are our results:

Taking the same text Penn used, we compared several AI detectors: Grammarly, GPTZero, QuillBot, and ZeroGPT, the AI detector Penn showed in his LinkedIn post.

BEST TO WORST: Detecting human-written text

- Grammarly. Of the four we tested, Grammarly performed best at distinguishing human-written from AI-generated text. It even reminded us to cite our work.

- QuillBot’s AI detector also identified the Declaration text as being “Human-written 100%.”

- GPTZero gave the Declaration of Independence an 89% probability of being written by humans.

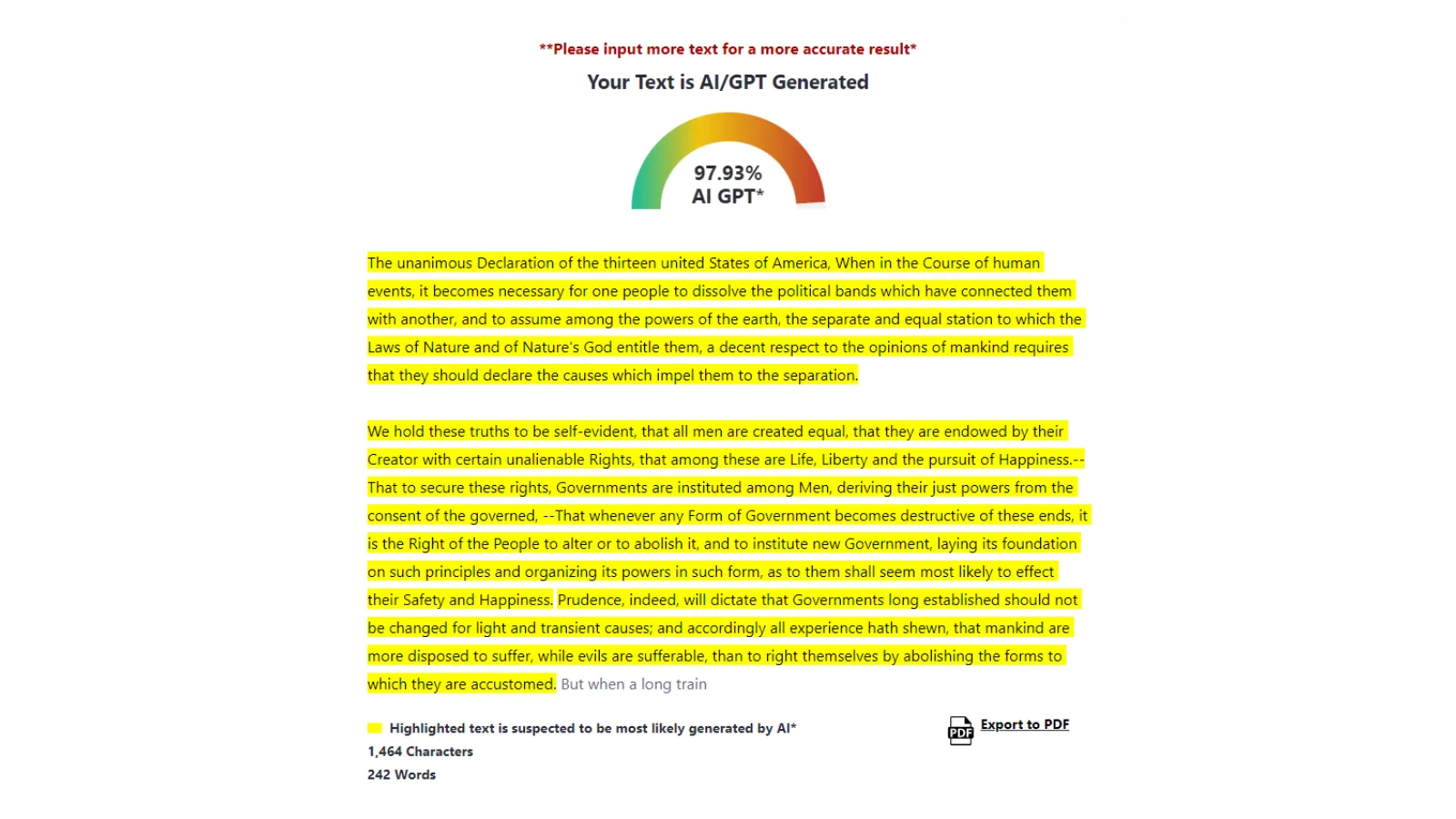

- ZeroGPT totally botched it and said the Declaration of Independence text was 97.93% AI-generated, even higher than Penn’s findings earlier this month.

In the next test, we had ChatGPT-4o rewrite the excerpt from “The Machine Stops” to see whether the AI detectors could spot the AI-written version.

BEST TO WORST: Detecting AI-written text

- Grammarly was the most effective in detecting AI-generated content when comparing “The Machine Stops” with its AI version.

- GPTZero identified the original story as 97% likely human-written, and the AI version as 95% AI-generated.

- QuillBot was unable to tell the difference between human and AI text, giving both a 0% probability of being AI-generated.

- ZeroGPT identified “The Machine Stops” text as likely human-written with a 4.27% probability, but mistakenly labeled the AI-generated version as human-written with a 6.35% probability.

“Grammarly continues to deepen its expertise in evaluating text originality and responsible AI use,” a Grammarly spokesperson told Decrypt, pointing to a company post about its AI detection software.

“We’re adding AI detection to our originality features as part of our commitment to responsible AI use,” the company said. “We’re prioritizing giving our users, particularly students, as much transparent information as possible, even though the technology has inherent limitations.”

The Grammarly spokesperson also highlighted the company’s latest update, Grammarly Authorship, a Google Chrome extension that enables users to demonstrate which parts of a document were human-created, AI-generated, or AI-edited.

“We would recommend against using AI detection results to directly discipline students,” GPTZero CTO Alex Cui told Decrypt. “I think it’s useful as a diagnostic tool, but requires our authorship tools for a real solution.”

Like Grammarly, GPTZero features an “authorship” tool that Cui recommends be used to verify that future content submissions are written by humans.

“Our writing reports in Google Docs and our own editor analyze the typing patterns on a document to see if the document was human written, and massively reduce the risk of incorrect conclusions,” he said.

Cui emphasized the importance of continually training the AI model on a diverse dataset.

“We use large natural language processing (NLP) and machine learning models trained on a dataset of millions of AI and human-generated documents, and are tested to have low error before release,” he said. “We tuned our detector to have less than 1% false-positive rates before launching in general to lower the risk of false positives.”

Penn pointed out that blindly relying on AI detectors to find plagiarism and cheating is just as dangerous as relying on AI to write a fact-based report.

“My caution to anyone thinking about using these tools is that they have unacceptably high false-positive rates for any mission-critical or high-risk application,” Penn said. “The false-positive rate—if you’re going to kick someone out of college or revoke their doctoral degree—has to be zero. Period. End of story. If institutions did that rigorous testing, they would quickly find out there’s not a single tool on the market they could buy. But that’s what needs to happen.”
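To put false-positive rates in concrete terms, here is a minimal Python sketch of the arithmetic. It is an illustration, not any vendor's method: the function names and the figure of 10,000 submissions are hypothetical, chosen only to show how a small rate scales across a large pool of human-written work.

```python
def false_positive_rate(false_positives: int, human_documents: int) -> float:
    """Share of human-written documents wrongly flagged as AI-generated."""
    if human_documents <= 0:
        raise ValueError("human_documents must be positive")
    return false_positives / human_documents


def expected_false_flags(rate: float, human_documents: int) -> float:
    """Expected number of human-written documents flagged at a given rate."""
    return rate * human_documents


# Hypothetical pool size for illustration only, not a measured result.
HYPOTHETICAL_SUBMISSIONS = 10_000

for rate in (0.01, 0.001, 0.0):
    flagged = expected_false_flags(rate, HYPOTHETICAL_SUBMISSIONS)
    print(f"{rate:.1%} false-positive rate: about {flagged:.0f} wrongly flagged")
```

Under these assumed numbers, even a 1% false-positive rate would wrongly flag roughly 100 human-written submissions out of 10,000, which is why Penn argues the acceptable rate for high-stakes decisions is zero.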

Thankfully, only 5% of this article came back as AI-generated.

ZeroGPT and QuillBot did not immediately respond to requests for comment.

Edited by Andrew Hayward